Introduction: From Data Sovereignty to Algorithm Sovereignty

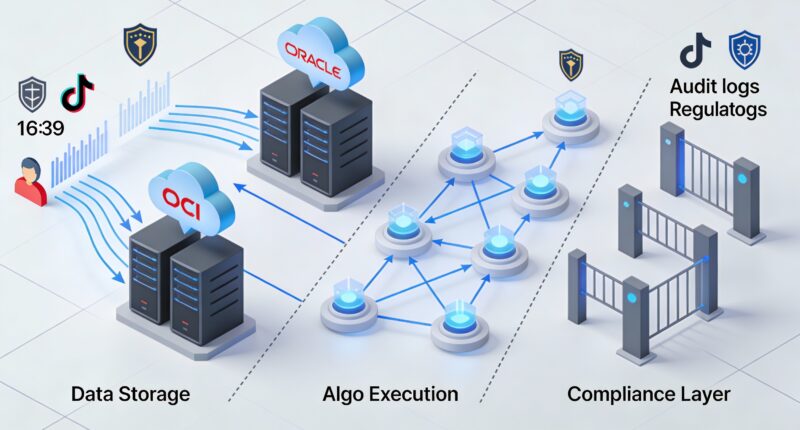

For years, debates around TikTok have focused on data sovereignty—where user data is stored, who can access it, and under which national laws it falls. Oracle’s expanded role in TikTok’s U.S. operations signals a more profound shift: the emergence of algorithm sovereignty. This concept goes beyond data custody to address who governs the recommendation systems that shape attention, culture, commerce, and political discourse.

TikTok’s algorithm is not just a piece of software. It is the core product. It determines what users see, how audiences form tastes, and how trends, narratives, and economic value propagate across the platform. As Oracle gains deeper control over TikTok’s infrastructure, review processes, and potentially model deployment pipelines, the user experience itself (which videos surface, how fast trends travel, and which creators break through) may subtly but materially change.

This article examines what algorithm sovereignty means in practice, how Oracle’s control could reshape TikTok’s experience, and why this shift matters to creators, users, regulators, and the future of global platforms.

What Is Algorithm Sovereignty?

Defining Algorithmic Control

Algorithm sovereignty refers to jurisdictional and institutional authority over how algorithms are trained, audited, deployed, and updated. Unlike data sovereignty, which concerns storage and access, algorithm sovereignty concerns the decision-making logic itself.

In TikTok’s case, the recommendation algorithm determines:

- Content ranking and visibility

- Trend formation and decay

- Monetization pathways for creators

- Exposure to news, politics, and social issues

Whoever controls the algorithm effectively controls the platform’s social and economic outcomes.

Why Governments Care About Algorithms

Regulators increasingly recognize that algorithms are not neutral. They encode incentives, values, and risk tolerances. Concerns include:

- Information manipulation

- Foreign influence operations

- Cultural and political amplification effects

- Youth safety and content moderation

Algorithm sovereignty allows governments to assert oversight without banning platforms outright.

Oracle’s Role in TikTok’s U.S. Stack

From Cloud Provider to Governance Partner

Oracle’s involvement began with hosting U.S. user data on its cloud infrastructure. Over time, its mandate expanded to include:

- Secure data pipelines

- Controlled access environments

- Third-party audits and compliance reporting

Crucially, Oracle is positioned as a trusted U.S. intermediary between TikTok’s Chinese parent company, ByteDance, and American regulators.

Algorithm Review and Deployment Controls

Oracle does not “own” TikTok’s algorithm, but control over the following creates leverage:

- Model deployment environments

- Update approval workflows

- Logging and observability systems

If algorithm updates must pass through U.S.-based infrastructure and review mechanisms, then effective sovereignty over how the algorithm evolves shifts accordingly.

This is not direct censorship or redesign, but procedural control—deciding what changes are acceptable, auditable, and compliant.
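To make the idea of procedural control concrete, here is a minimal sketch of a deployment gate. Everything in it is hypothetical: the `ModelUpdate` fields, the approval names, and the `can_deploy` function are illustrative assumptions, not a description of how Oracle or TikTok actually review updates.

```python
from dataclasses import dataclass, field


@dataclass
class ModelUpdate:
    """A proposed change to a recommendation model (hypothetical structure)."""
    version: str
    reviewed_by_auditor: bool = False
    change_log_recorded: bool = False
    approvals: set[str] = field(default_factory=set)


# Hypothetical sign-offs that a reviewer-controlled pipeline might require.
REQUIRED_APPROVALS = {"security_review", "compliance_review"}


def can_deploy(update: ModelUpdate) -> tuple[bool, list[str]]:
    """Return whether the update may ship, plus the reasons it may not."""
    blockers = []
    if not update.reviewed_by_auditor:
        blockers.append("missing third-party audit")
    if not update.change_log_recorded:
        blockers.append("change not logged")
    missing = REQUIRED_APPROVALS - update.approvals
    if missing:
        blockers.append("missing approvals: " + ", ".join(sorted(missing)))
    return (not blockers, blockers)
```

The gate never inspects or rewrites the model itself. It only decides whether a given version is allowed to ship, which is the sense in which control can exist without ownership.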

How Algorithm Sovereignty Changes the TikTok Experience

Subtle Shifts, Not Sudden Overhauls

Users should not expect an abrupt redesign of TikTok’s feed. Instead, changes are likely to be incremental and statistical rather than visible.

Potential areas of impact include:

- Reduced volatility in trend amplification

- More conservative handling of political or civic content

- Increased weighting toward advertiser-safe engagement signals

These adjustments alter the “feel” of the platform over time rather than through a single update.

Content Moderation Meets Recommendation Logic

Traditionally, moderation and recommendation are treated as separate systems. Algorithm sovereignty collapses that distinction.

If regulators require demonstrable safeguards, recommendation systems may:

- Down-rank borderline content rather than removing it

- Limit rapid virality in sensitive categories

- Introduce friction into trend propagation

For users, this can feel like a calmer, more predictable feed—less chaotic, but potentially less explosive.
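The safeguards listed above can be sketched in a few lines. This is an illustrative assumption about how down-ranking and a virality limit might be expressed, not TikTok’s actual logic; the function names, the 0.5 factor, and the hourly cap are all made up for the example.

```python
def adjusted_score(base_score: float, borderline: bool,
                   downrank_factor: float = 0.5) -> float:
    """Down-rank borderline content instead of removing it."""
    return base_score * downrank_factor if borderline else base_score


def capped_impressions(requested: int, already_served_this_hour: int,
                       sensitive: bool, hourly_cap: int = 10_000) -> int:
    """Limit how fast content in a sensitive category can spread in one hour."""
    if not sensitive:
        return requested
    remaining = max(hourly_cap - already_served_this_hour, 0)
    return min(requested, remaining)
```

In this sketch a borderline video stays on the platform but competes with half its original score, and a sensitive video that has already been served 8,000 times in the current hour can receive at most 2,000 more impressions. That is the kind of friction that slows a trend without removing it.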

Implications for Creators

From Algorithm Hacking to Compliance-Aware Creativity

TikTok creators have long optimized for perceived algorithmic signals: watch time, completion rate, and rapid engagement bursts. Under algorithm sovereignty, these signals may be rebalanced.

Creators could see:

- Greater emphasis on sustained engagement over shock virality

- Reduced rewards for borderline or polarizing content

- More consistent distribution for compliant content categories

This favors professionalized creators and brands over experimental or controversial voices.
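A toy example shows how rebalancing the signals named above could change which videos win. The weights, the signal values, and the `rank_score` function are assumptions chosen for illustration; real ranking systems are far more complex and are not public.

```python
# Hypothetical signal weights for two ranking regimes.
VIRALITY_FIRST = {"watch_time": 0.3, "completion_rate": 0.2, "engagement_burst": 0.5}
SUSTAINED_FIRST = {"watch_time": 0.5, "completion_rate": 0.3, "engagement_burst": 0.2}


def rank_score(signals: dict[str, float], weights: dict[str, float]) -> float:
    """Weighted sum of normalized signals (each expected in the range 0 to 1)."""
    return sum(weights[name] * signals.get(name, 0.0) for name in weights)


# Two made-up videos: one built for a burst of reactions, one for steady viewing.
shock_clip = {"watch_time": 0.2, "completion_rate": 0.3, "engagement_burst": 0.9}
steady_clip = {"watch_time": 0.8, "completion_rate": 0.7, "engagement_burst": 0.3}
```

Under `VIRALITY_FIRST` the shock clip outscores the steady clip, and under `SUSTAINED_FIRST` the order reverses. Neither video changed; only the weighting did.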

Monetization and Brand Safety Alignment

Oracle’s governance role aligns TikTok more closely with enterprise advertising norms. Expect:

- Tighter coupling between recommendation and ad suitability

- Greater predictability for brand partnerships

- Slower but more stable creator income trajectories

For some creators, this reduces income volatility. For others, it limits breakout potential.

Implications for Users

A More “Regulated” Discovery Experience

TikTok’s defining feature has been radical discovery—anyone can go viral overnight. Algorithm sovereignty introduces guardrails.

Users may notice:

- Fewer extreme content swings

- Less sudden exposure to sensitive topics

- More repetition within interest clusters

The feed becomes safer and more legible, but potentially less surprising.

Trust Versus Excitement Trade-Off

From a policy perspective, algorithm sovereignty aims to increase trust: trust that the platform is not manipulated by foreign actors, and trust that harmful amplification is controlled.

The trade-off is reduced algorithmic risk-taking. TikTok’s challenge is preserving its addictive discovery engine while satisfying governance constraints.

The Geopolitical Dimension

Algorithm Sovereignty as a New Trade Barrier

By asserting algorithm sovereignty, the U.S. effectively raises the cost of operating a global platform that lacks local compliance structures.

This creates a precedent:

- Platforms must localize not just data, but decision logic

- Algorithms become subject to national review standards

- Cross-border model deployment becomes fragmented

This is a form of digital industrial policy.

Fragmentation of the Global Internet

If other countries adopt similar requirements, platforms may need multiple algorithmic variants:

- A U.S.-compliant recommendation system

- A Europe-compliant system under the EU’s Digital Services Act (DSA)

- Separate models for other regulatory regimes

The result is a splintered algorithmic internet, even if the interface looks identical.
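One way to picture this splintering is a per-region policy table wrapped around a shared interface. The sketch below is hypothetical throughout: the `RankingPolicy` fields, the policy names, and the flag values are placeholders for illustration, not summaries of any law or of any platform’s configuration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RankingPolicy:
    """Per-jurisdiction constraints applied around a ranking model."""
    name: str
    requires_update_review: bool
    offers_non_profiling_feed: bool


# Illustrative entries only; the flags are placeholders, not legal summaries.
POLICIES = {
    "US": RankingPolicy("us-reviewed", True, False),
    "EU": RankingPolicy("eu-dsa", True, True),
}
DEFAULT_POLICY = RankingPolicy("global-default", False, False)


def policy_for(region: str) -> RankingPolicy:
    """Pick the policy variant for a region code, falling back to the default."""
    return POLICIES.get(region.upper(), DEFAULT_POLICY)
```

The app calls `policy_for` the same way everywhere, so the interface can look identical while the rules behind it differ by jurisdiction.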

Oracle’s Strategic Advantage

Infrastructure as Power

Oracle’s value is not creative or cultural—it is institutional trust. By embedding itself into TikTok’s operational core, Oracle becomes:

- A gatekeeper of compliance

- A mediator between state and platform

- A model for future “trusted cloud” arrangements

This positions Oracle as a strategic player in digital governance, not just enterprise IT.

A Blueprint for Other Platforms

If TikTok’s arrangement proves durable, similar structures could emerge for:

- AI model hosting

- Recommendation engines in social platforms

- Cross-border consumer apps

Algorithm sovereignty may become a standard requirement for global scale.

Risks and Unintended Consequences

Over-Standardization of Culture

When algorithms are constrained by regulatory and enterprise norms, cultural expression can narrow. Viral creativity thrives on edge cases and unpredictability.

Excessive governance risks:

- Homogenizing content

- Favoring incumbents over newcomers

- Reducing cultural diversity

This is difficult to measure but important to acknowledge.

Transparency Without Understanding

Algorithm audits often produce documentation without genuine public comprehension. Users may be told the system is “safe” without understanding how it shapes their behavior.

Algorithm sovereignty should not become a black box with a government seal of approval.

The Future of Algorithm Sovereignty

From Exception to Norm

What began as a TikTok-specific geopolitical compromise may evolve into a standard model for platform governance. Algorithms that shape mass behavior are increasingly viewed as infrastructure.

Future debates will focus on:

- Who defines acceptable algorithmic behavior

- How much innovation can occur under oversight

- Whether sovereignty can coexist with global creativity

What This Means for TikTok’s Identity

TikTok’s success came from its frictionless, hyper-optimized algorithm. Oracle’s control does not end that model, but it does civilize it.

The platform moves:

- From insurgent disruptor to regulated incumbent

- From pure growth optimization to balanced governance

The TikTok experience will remain engaging, but it will be less wild—and more accountable.

Conclusion: Control Without Ownership

Algorithm sovereignty represents a new phase in the relationship between technology, state power, and culture. Oracle’s role in TikTok demonstrates that control does not require ownership. By governing infrastructure, deployment, and compliance pathways, a trusted intermediary can reshape how an algorithm behaves without rewriting its code.

For users and creators, the changes will be subtle but cumulative. For regulators and platforms, this model offers a way to manage risk without resorting to outright bans. And for the global internet, it signals a future where algorithms are no longer borderless, even when the app in your hand looks the same.

Algorithm sovereignty is not about stopping TikTok. It is about deciding who gets to steer the invisible systems that decide what the world sees next.