Google Smart Glasses: The Next Evolution of Wearable Computing

A New Era of AI-Powered Vision

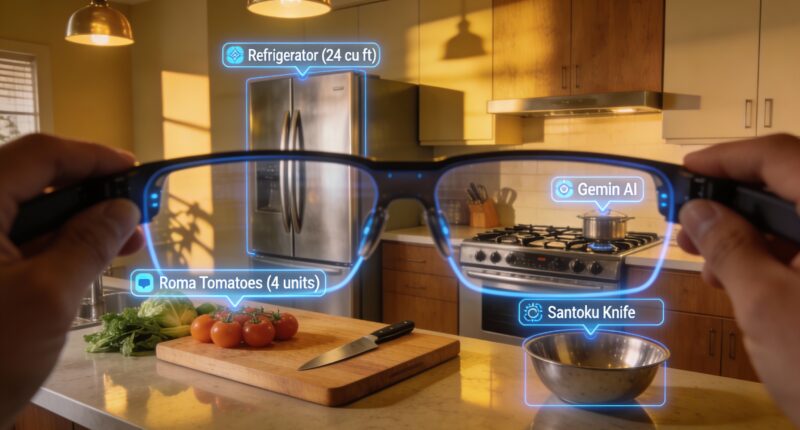

The announcement of the new Google Smart Glasses featuring Gemini marks a significant milestone in wearable computing. Unlike earlier iterations of Google Glass, which were primarily enterprise-oriented and limited in capability, the new generation is designed around AI-native functionality. Gemini, Google’s multimodal AI model, gives the device the ability to interpret images, video, audio, and text in real time.

This transition from a basic optical display to an AI-first architecture places the new Google Smart Glasses in a distinct category: an always-available personal AI companion integrated into everyday life.

Key Features of the New Google Smart Glasses with Gemini AI

1. Real-Time Visual Understanding

AI-Driven Object Recognition

Gemini can analyze what the user is seeing in real time. Look at an object, and the glasses can provide contextual information: what it is, how it works, where it comes from, or where it can be purchased.

Scene Interpretation

The glasses can interpret environments—store aisles, city streets, documents on a table—transforming the user’s field of view into an interactive layer of understanding.

2. Real-Time Translation

Instant Speech and Text Translation

The glasses can provide:

- Subtitled real-time speech translation
- Automatic translation of written text within the user’s view
- Contextual corrections based on idioms and tone

Multilingual communication becomes far smoother, helping users travel, negotiate, and collaborate across language barriers.

3. Hands-Free Querying and Assistance

Voice + Gesture Control

Gemini enables fluid command input through simple phrases such as:

- “What am I looking at?”
- “Translate this menu.”
- “Summarize this document.”
- “Guide me to the nearest MRT station.”

Gesture or gaze-based triggers allow activation without raising a hand.

4. Navigation and Geospatial Awareness

AR Route Guidance

On-screen overlays provide real-time directions:

- Walking paths
- Cycling routes
- Public transit instructions
- Indoor navigation (airports, malls, campuses)

The device anchors digital markers directly onto physical surroundings through spatial computing.

5. Real-Time Research and Information Retrieval

Contextual AI Search

Users can look at:

- A historic landmark
- A product label
- A restaurant storefront

Gemini then surfaces relevant information, such as reviews, prices, opening hours, and historical context, without any manual typing.

6. Health, Safety, and Accessibility

Supportive Tools for Everyday Functionality

Gemini-powered features enhance accessibility:

- Object detection for visually impaired users
- Spoken descriptions of scenes
- Real-time hazard alerts (steps, obstacles, vehicles)

Additionally, the glasses can integrate health monitoring:

- Posture feedback
- Activity tracking
- Environmental measurements (UV, light, or air quality, depending on sensors)

7. Productivity and Workflow Support

Workplace AI Enhancement

The glasses can support:

- Live meeting transcription
- Real-time summarization
- Workflow reminders
- Context-aware task management

Enterprise users can benefit from instant data overlays during technical inspections, training sessions, or field operations.

How Gemini AI Transforms the Smart Glasses Experience

Multimodal Intelligence at the Core

Gemini is not merely a large language model. It is a multimodal, real-time reasoning engine capable of processing video, audio, spatial cues, and environmental context. With its integration into smart glasses, the device becomes capable of:

1. Understanding what the user sees

2. Responding with actionable insights

3. Updating continuously as the environment changes

This allows unprecedented AI-assisted situational awareness.

Real-Time Processing: On-Device and Cloud Hybrid

Google is expected to combine lightweight on-device AI models with cloud-based Gemini models, choosing between them according to task complexity.

Benefits include:

- Low latency
- Enhanced privacy
- Battery efficiency

Critical tasks like object detection or navigation can run locally, while complex tasks like document summarization or advanced translation rely on cloud processing.
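The split described above could be sketched roughly as follows. This is only an illustration of the routing idea, not Google's implementation: the `Task` fields, the 1-10 complexity scores, the `LOCAL_COMPLEXITY_LIMIT` threshold, and the offline fallback are all hypothetical assumptions.

```python
from dataclasses import dataclass
from enum import Enum


class Target(Enum):
    ON_DEVICE = "on_device"
    CLOUD = "cloud"


@dataclass(frozen=True)
class Task:
    name: str
    complexity: int         # hypothetical score from 1 (trivial) to 10 (heavy)
    latency_critical: bool  # True if the result must not wait on the network


# Hypothetical cutoff: tasks at or below this score stay on the device.
LOCAL_COMPLEXITY_LIMIT = 4


def route(task: Task, online: bool = True) -> Target:
    """Decide where a task runs under the assumptions stated above."""
    # Latency-critical work stays local, as does everything when offline
    # (assumed fallback: a smaller local model gives a degraded answer).
    if task.latency_critical or not online:
        return Target.ON_DEVICE
    # Light tasks also stay local to save a network round trip.
    if task.complexity <= LOCAL_COMPLEXITY_LIMIT:
        return Target.ON_DEVICE
    # Everything else is sent to the larger cloud model.
    return Target.CLOUD


# Example tasks taken from the article; the scores are made up.
TASKS = [
    Task("object_detection", complexity=3, latency_critical=True),
    Task("navigation", complexity=4, latency_critical=True),
    Task("document_summarization", complexity=8, latency_critical=False),
    Task("advanced_translation", complexity=7, latency_critical=False),
]

if __name__ == "__main__":
    for task in TASKS:
        print(f"{task.name}: {route(task).value}")
```

Checking latency sensitivity before complexity reflects one plausible design choice: features such as hazard alerts should never depend on a network connection, even if the local model is less capable.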

Use Cases Across Industries and Daily Life

1. Travel and Navigation

Gemini-powered smart glasses eliminate friction for travelers:

- Street signs translate automatically
- Guidance overlays appear on sidewalks
- Restaurant reviews appear as the user looks around

Exploration becomes more intuitive and visually enhanced.

2. Education and Learning

Students can look at a chemical formula, a mechanical diagram, or a museum artifact, and receive:

- Definitions
- Related concepts
- Explanations
- 3D modeling overlays

Learning becomes interactive, immediate, and visually anchored.

3. Healthcare and Medical Support

Medical professionals can leverage:

- Hands-free patient chart access
- Real-time analytics during procedures
- Visual overlays for scanning equipment
- On-the-job training aids

AI-enhanced glasses become a second set of eyes for clinicians.

4. Manufacturing, Logistics, and Technical Fields

Workers gain hands-free digital assistance for:

- Equipment diagnosis
- Step-by-step repair instructions
- Inventory management
- Industrial safety alerts

Productivity can rise and error rates can fall as a result.

5. Accessibility for Users with Impairments

Gemini AI supports users with:

- Visual impairments (narration of objects and environments)
- Hearing impairments (live captions and translations)
- Cognitive challenges (simplified task guidance)

This may represent one of the most meaningful applications of AI wearables.

Design and Hardware Expectations

Lightweight and Comfortable Form Factor

Google has emphasized human-centered design:

- Lightweight frames
- Discreet styling
- Full-day wearability
- Replaceable lenses

The device aims to blend into daily life, avoiding the conspicuous appearance of VR headsets or bulky AR equipment.

Display and Optics

The glasses are expected to use:

- Transparent micro-displays
- Adjustable brightness
- High contrast for outdoor use

Information appears as subtle overlays rather than full immersive imagery, making them suitable for practical everyday use.

Audio & Microphone System

Bone-conduction or near-field directional speakers may be integrated for:

- Private audio
- Clear communication
- Ambient awareness

Multiple microphones enable noise cancellation and accurate voice capture.

Battery and Performance

Prioritizing efficiency, Google is likely employing:

- Ultra-low-power chipsets
- Adaptive refresh rates
- Auto-dimming displays

The expected target is a full day of moderate use on a single charge.

Competitive Landscape: How Google Smart Glasses Compare to Apple, Meta, and Others

Google vs. Meta (Ray-Ban Meta Smart Glasses)

Meta’s glasses focus primarily on:

- Social media integration
- Photography and livestreaming
- Voice-based AI queries

Google’s glasses, powered by Gemini, emphasize:

- Real-time visual reasoning
- Translation
- Productivity
- Navigation
- Ambient computing

The two share similarities, but Google’s proposition leans more heavily on AI-driven reasoning than on media capture and sharing.

Google vs. Apple Vision Pro

Apple Vision Pro focuses on spatial computing and immersive environments.

Google’s glasses:

- Are lightweight instead of immersive
- Function primarily as an AI assistant
- Prioritize utility over entertainment

The two products serve different use cases and customer bases.

Google vs. Emerging AR Players

Companies like XReal, Vuzix, and Magic Leap produce augmented displays.

Google’s differentiation lies in Gemini: the AI layer is meant to make the glasses more intelligent, contextual, and responsive than display-first AR systems.

Potential Market Impact and Strategic Positioning

Reinforcement of Google’s AI Strategy

The glasses embody Google’s goal to integrate Gemini into every facet of daily life.

This device accelerates:

- Ambient AI
- Contextual computing
- Ubiquitous assistance

By combining hardware and services, Google positions itself against Apple and Meta in the future of AI wearables.

Re-Entry into the Consumer Wearable Market

After the discontinuation of the original Google Glass, this new version:

- Targets mainstream users
- Offers clear practical value
- Benefits from a mature AI infrastructure

The timing aligns with rising consumer readiness for AI wearables.

Impact on Accessibility, Education, and Healthcare

Gemini-powered smart glasses may become essential tools in:

- Assistive technology
- Remote education
- Telemedicine
- Field diagnostics

Google could secure major institutional and enterprise partnerships.

Challenges and Considerations

Privacy and Security

Smart glasses raise concerns around:

- Recording
- Data retention
- Facial recognition ethics

Google must ensure:

- Visible recording indicators
- Strict permissions
- On-device encryption
- Transparent data policies

Battery Life Limitations

Advanced AI processing requires significant power.

Balancing functionality with endurance remains a technical challenge.

Social Acceptance and Design

Adoption depends heavily on:

- Style
- Comfort
- Perceived intrusiveness

The design must feel natural enough to wear daily.

Conclusion: A Transformative Step for AI Wearables

The new Google Smart Glasses featuring Gemini AI represent a monumental leap forward in ambient computing. By merging advanced multimodal intelligence with lightweight wearable hardware, Google is creating a tool that can fundamentally reshape productivity, accessibility, travel, communication, and real-world interaction.

Where smartphones require users to look down, smart glasses powered by Gemini empower users to look up—and learn, act, and engage with the world more fluidly. If executed effectively, these glasses may become the first widely adopted AI wearable of the 2030s, defining a new paradigm of human-machine interaction.